How to Validate Real Estate Forecasting Models

Accurate real estate forecasting is challenging but essential: forecast errors can lead to costly outcomes such as overpaying for properties or breaching debt covenants. To avoid this, validate models thoroughly with methods tailored to the unique complexities of real estate data, such as time sensitivity, geographic variation, and economic constraints.

Key steps include:

- Define clear forecasting goals: Break down objectives (e.g., rent growth or NOI projections) into measurable targets.

- Prepare and partition data: Clean missing values, handle outliers, and use chronological splits to avoid data leakage.

- Choose the right validation methods: Use k-fold cross-validation for static models and rolling-origin validation for time-series forecasts.

- Apply domain checks: Ensure results align with market realities like realistic rent growth, occupancy rates, and cap rates.

- Run scenario tests: Assess performance under different market conditions to gauge resilience.

- Monitor performance: Backtest forecasts regularly and track errors over time to maintain reliability.

The goal? Reliable forecasts that guide better decisions while minimizing risk. Tools like CoreCast can centralize data, automate validation, and simplify reporting to keep forecasts accurate and actionable.

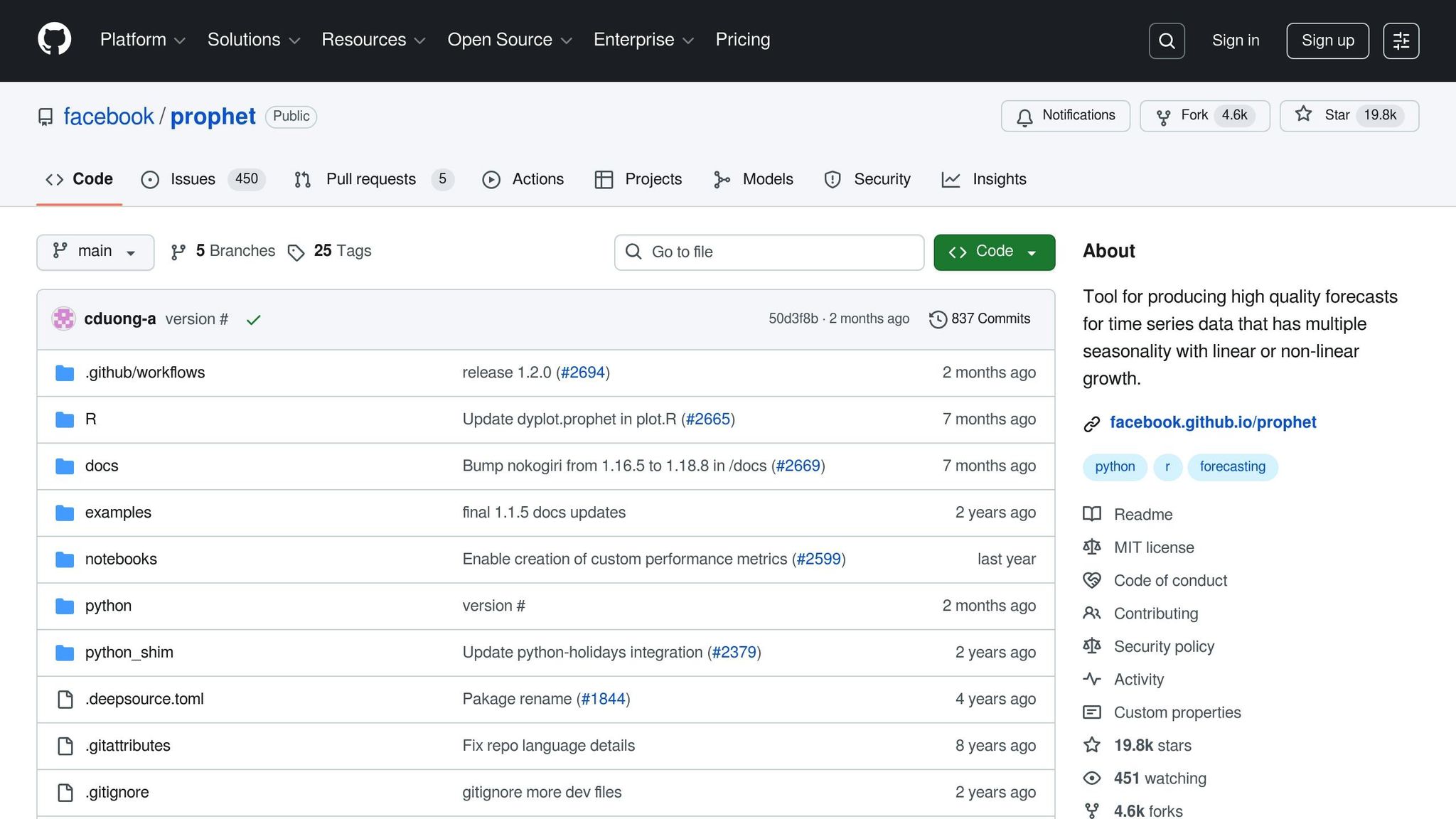

Time Series Forecasting Rental Prices with FB Prophet

Define Forecasting Objectives and Data Scope

Start by clearly defining what you aim to predict and the data required to make it happen. Your objectives will shape every decision that follows, from the algorithms you experiment with to the error metrics you choose and the level of accuracy you aim for. For instance, forecasting next quarter's rent growth for a Class A multifamily property in Austin requires a different validation approach than projecting five-year net operating income (NOI) for a national office portfolio. This clarity will guide your validation strategy, beginning with well-defined forecast goals.

Set Clear Forecast Goals

Transform broad business questions into specific, measurable targets. For example, if your investment team asks, "Can we achieve a 7% leveraged IRR on this acquisition?", break the question into forecast components such as projected rent per square foot, stabilized occupancy, operating expense ratios, and exit cap rates over the holding period. Express each component in precise units, such as dollars per square foot per year for rent or percentage points for occupancy. This detail tells you how much forecast error is acceptable during validation: you might, for instance, require five-year NOI projections to stay within a margin of error small enough that the target IRR still holds under realistic scenarios.

Common forecasting objectives in U.S. real estate include:

- Rent Growth Models: Focus on year-over-year changes at the submarket level, typically over 12 to 24 months. Validation often involves metrics like mean absolute error (MAE) in dollars per square foot or mean absolute percentage error (MAPE).

- NOI Forecasts: Combine revenue and operating expense projections over several years. Validation ensures errors in key components, such as rent or expenses, don’t compound excessively when rolled up to NOI.

- Cap Rate Models: Due to their sensitivity to interest rates and transaction yields, these models require validation across various monetary policy scenarios.

- Occupancy and Absorption Forecasts: Useful for lease-up projections in new developments, these often need high-frequency metrics (weekly or monthly) during early stabilization, transitioning to quarterly projections as the property matures.

- Asset Valuation Models: Combine multiple forecasted inputs to estimate property or portfolio values. Validation should assess both point-estimate accuracy and whether the model’s uncertainty range aligns with actual market transaction spreads.

Your chosen forecast time horizon and level of detail also influence validation design. Monthly property-level forecasts work well for asset management and covenant monitoring, catching issues early, while quarterly portfolio-level forecasts are better suited for fund reporting and capital planning. Validation strategies should align with these choices, testing short-term accuracy for immediate needs and long-term stability for strategic planning.

Identify Data Dimensions

Once your forecast goals are set, define the data dimensions needed to support them. Create a minimum viable dataset, specifying required fields, units, time span, and quality standards.

Geographic Scope

Deciding on the geographic scope is critical. A national model captures broader trends when local transaction data is limited, but location-sensitive assets may need submarket or neighborhood-level models. During validation, test performance across geographic levels (e.g., metro and submarket) to identify where the model performs well and where adjustments might be needed.

Asset Class and Risk Profile

Segmenting by asset class (multifamily, office, industrial, retail) and risk profile (core, value-add, opportunistic) adds precision. Each segment has unique lease structures, volatility, and sensitivity to economic cycles.

| Risk Profile | Typical Characteristics | Validation Considerations |
|---|---|---|
| Core | Stabilized occupancy, long-term leases, lower volatility | Focus on steady cash flow accuracy and test sensitivity to minor cap rate fluctuations. |
| Value-Add | Moderate vacancy, active leasing, renovation risk | Validate lease-up timing, rent growth assumptions, and stress-test potential delays. |
| Opportunistic | High vacancy, significant repositioning, development risk | Stress-test outcomes under extreme conditions. |

Report performance separately by asset class and risk profile. For example, a model trained on stabilized multifamily properties may underestimate volatility in opportunistic office deals.

Essential Data Fields

For rent and NOI forecasting, key data fields include historical in-place rents, asking rents, lease start and end dates, concessions, vacancy and absorption data, operating expenses by category, and property characteristics like size, year built, and class. Specify units (e.g., dollars per square foot per year), the time span (ideally three to five years of history), and acceptable levels of missing data or outliers.

External Economic and Market Drivers

Incorporate data like local vacancy rates, job growth, household formation, construction activity, interest rates, inflation, and lending spreads. When integrating these factors, validation should test model performance under varying macroeconomic conditions, such as high-vacancy versus tight markets or rising-rate versus falling-rate periods.

A real estate intelligence platform like CoreCast can simplify this process by standardizing objectives and data dimensions across your portfolio. By centralizing property attributes, pipeline stages, portfolio analysis, and reporting in one place, CoreCast ensures consistent geographic and asset-class definitions, comparable data scopes across deals, and easy monitoring of validation results as your models evolve.

Balancing data scope is critical. A scope that’s too broad - like combining short-term lease-up assets with stabilized core holdings across unrelated metros - can lead to unstable error metrics during validation. On the other hand, a scope that’s too narrow may leave you without enough data for reliable statistical analysis. The goal is to group markets and assets with similar economic drivers while maintaining sufficient historical data for meaningful error estimates and stress tests.

With your objectives and data dimensions clearly defined, you’re ready to move on to preparing and partitioning your real estate data for validation.

Prepare and Partition Real Estate Data

Once you've outlined your forecasting goals and determined the scope of your data, the next step is preparing it for validation. This process is critical - it can mean the difference between generating reliable forecasts or ending up with misleading results. Real estate datasets often pull together information from various sources, including public records, MLS listings, property management systems, demographic databases, and macroeconomic indicators. Each source comes with its own quirks, like differing formats, update frequencies, and quality standards.

This preparation phase has two key parts: cleaning and standardizing your data for consistency, and partitioning it to respect the time-sensitive nature of real estate forecasting. Both steps are essential for ensuring that your model delivers realistic performance during validation.

Clean and Standardize Data

Real estate data is rarely perfect. It can include missing values, outliers, and inconsistencies in units or formats. Addressing these issues is essential to maintain the integrity of your analysis.

Handling Missing Values

Missing data is a common issue, whether it’s gaps in lease records or incomplete property details. For continuous data like monthly rents, you can use techniques such as forward-fill, backward-fill, or interpolation for short gaps. For datasets with interconnected features - like property details, neighborhood stats, or market indicators - model-based imputation methods (e.g., K-nearest neighbors or regression-based imputation) can be more effective. These methods take into account relationships between variables but require extra computational effort and careful validation to avoid introducing bias [2].

Keep a record of which fields have missing data, how significant the gaps are, and the methods you used to fill them. During validation, check if properties with imputed values behave differently compared to those with complete records.
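As a minimal sketch of short-gap interpolation with an audit trail, assuming a monthly rent series stored as a Python list with `None` for missing months: the function name `fill_short_gaps` and the two-month `max_gap` default are illustrative choices, not a standard. Longer gaps are deliberately left empty so they can go to model-based imputation or manual review, and the returned flags record exactly which points were imputed.

```python
from typing import List, Optional, Tuple


def fill_short_gaps(
    values: List[Optional[float]], max_gap: int = 2
) -> Tuple[List[Optional[float]], List[bool]]:
    """Linearly interpolate interior runs of None no longer than max_gap.

    Returns the filled series and a parallel list of flags marking imputed
    points, so imputed records can be compared with complete ones later.
    Leading/trailing gaps and gaps longer than max_gap are left as None.
    """
    filled = list(values)
    imputed = [False] * len(values)
    i = 0
    while i < len(values):
        if values[i] is not None:
            i += 1
            continue
        start = i  # first missing position in this run
        while i < len(values) and values[i] is None:
            i += 1
        end = i  # first observed position after the run (or len(values))
        gap = end - start
        # Only fill interior gaps that have an observed value on both sides.
        if start > 0 and end < len(values) and gap <= max_gap:
            left, right = values[start - 1], values[end]
            step = (right - left) / (gap + 1)
            for k in range(gap):
                filled[start + k] = left + step * (k + 1)
                imputed[start + k] = True
    return filled, imputed


# Hypothetical monthly rents with a two-month gap and a three-month gap.
rents = [1500.0, None, None, 1530.0, None, None, None, 1560.0]
filled, flags = fill_short_gaps(rents)
print(filled)  # [1500.0, 1510.0, 1520.0, 1530.0, None, None, None, 1560.0]
print(flags)
```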

Detecting and Treating Outliers

Outliers can stem from genuine market events (like distressed sales or luxury transactions) or simple data-entry errors. The interquartile range (IQR) method is a reliable way to identify them: calculate the 25th and 75th percentiles, determine the IQR, and flag values that fall more than 1.5 times the IQR below the 25th percentile or above the 75th percentile [2].

Before removing outliers, review them carefully. For example, a luxury property selling at an unusually high price might be an outlier statistically but still valid for certain market segments. If you confirm an outlier as an error, consider replacing it with a null value and applying interpolation to maintain data consistency [2].
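The IQR rule and the "null out confirmed errors" step can be sketched with the standard library alone. This is an illustrative sketch: the function names are hypothetical, the price-per-square-foot values are made up, and quartile conventions differ slightly between tools (`method="inclusive"` interpolates linearly between observed points).

```python
import statistics
from typing import List, Optional, Set


def iqr_outlier_flags(values: List[float], k: float = 1.5) -> List[bool]:
    """Flag values below Q1 - k*IQR or above Q3 + k*IQR (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v < lower or v > upper for v in values]


def null_confirmed_errors(values: List[float], confirmed: Set[int]) -> List[Optional[float]]:
    """Replace only reviewer-confirmed errors with None so they can be interpolated."""
    return [None if i in confirmed else v for i, v in enumerate(values)]


price_psf = [210.0, 220.0, 230.0, 230.0, 240.0, 1200.0]
print(iqr_outlier_flags(price_psf))  # [False, False, False, False, False, True]
# Suppose review confirms index 5 is a data-entry error rather than a luxury sale:
print(null_confirmed_errors(price_psf, confirmed={5}))
```

Flagging and removal are kept as separate functions on purpose, so a flagged but genuine luxury transaction stays in the data unless someone confirms it as an error.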

Standardizing Units and Formats

To make comparisons easier, express all monetary values in U.S. dollars and quote prices or rents per square foot (USD/sq ft), using square feet consistently for area measurements. Store time stamps in one uniform format (for example, ISO 8601 YYYY-MM-DD, which also sorts chronologically as text), with month-end dates working well for most forecasting tasks. When combining property-level data with broader market indicators (e.g., interest rates or employment data), ensure each variable is lagged or aligned to the date it actually became available. For instance, if forecasting March 2025 rents, your model should only include economic data available through February 2025 or earlier [1].
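One way to enforce "only data available before the forecast date" is an as-of lookup keyed by each figure's publication date. The sketch below assumes you track release dates (not just reference months); the series values and the `latest_available` name are hypothetical.

```python
from bisect import bisect_left
from datetime import date
from typing import List, Optional


def latest_available(
    release_dates: List[date], values: List[float], as_of: date
) -> Optional[float]:
    """Return the most recent value released strictly before `as_of`.

    `release_dates` must be sorted ascending and aligned with `values`.
    Returns None when nothing had been released yet.
    """
    i = bisect_left(release_dates, as_of)  # number of releases strictly before as_of
    return values[i - 1] if i > 0 else None


# Hypothetical metro unemployment rates, keyed by the date each figure was published.
released = [date(2025, 1, 31), date(2025, 2, 28), date(2025, 3, 31)]
unemployment = [4.0, 4.1, 4.2]

# Forecasting March 2025 rents: only data published before March 1 is usable.
print(latest_available(released, unemployment, date(2025, 3, 1)))  # 4.1
```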

Encoding Categorical Features

Real estate data often includes categorical variables, like property type (e.g., multifamily, office), building class (A, B, C), or construction material (e.g., brick, concrete). Since most machine learning algorithms require numerical inputs, these categories need to be converted. One-hot encoding is a common approach - it creates binary columns for each category (e.g., a column for "multifamily" and another for "office"), preserving distinctions without implying any order.
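A minimal stdlib sketch of one-hot encoding follows; in practice a library encoder would usually do this, and the `one_hot` helper and `is_` column prefix here are illustrative. The key design point it shows is reusing the training-set category list when encoding new data, so columns line up and unseen categories do not create new columns.

```python
from typing import List, Optional, Sequence, Tuple


def one_hot(
    values: Sequence[str], categories: Optional[Sequence[str]] = None
) -> Tuple[List[str], List[List[int]]]:
    """Return (column_names, rows) with one 0/1 column per category.

    Pass the category list learned on training data when encoding new data so
    the columns line up; unseen categories become an all-zero row.
    """
    cats = sorted(set(values)) if categories is None else list(categories)
    position = {c: j for j, c in enumerate(cats)}
    rows = []
    for v in values:
        row = [0] * len(cats)
        if v in position:
            row[position[v]] = 1
        rows.append(row)
    return [f"is_{c}" for c in cats], rows


cols, rows = one_hot(["multifamily", "office", "multifamily"])
print(cols)  # ['is_multifamily', 'is_office']
print(rows)  # [[1, 0], [0, 1], [1, 0]]
```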

Performing Exploratory Data Analysis (EDA)

Before finalizing your dataset, conduct exploratory data analysis (EDA) to uncover trends, patterns, or remaining issues. Time-series plots can highlight seasonal variations - like a spike in residential sales during spring and summer. Histograms can reveal skewed distributions that might need transformation, while correlation matrices can identify relationships between variables that could impact model performance. Insights from EDA can guide decisions on modeling approaches, such as whether to use ARIMA for trend-driven data or SARIMA for datasets with strong seasonal patterns [2].
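A quick numeric companion to a time-series plot is a seasonal index: the average for each calendar month divided by the overall average. This is a rough EDA sketch, not a seasonal decomposition; it assumes each calendar month appears roughly equally often, and the sales counts are hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import mean
from typing import Dict, List, Tuple


def seasonal_index(obs: List[Tuple[date, float]]) -> Dict[int, float]:
    """Average value for each calendar month divided by the overall average.

    Values above 1.0 mark months that run above the series' typical level;
    values below 1.0 mark slow months. Assumes months are evenly represented.
    """
    by_month: Dict[int, List[float]] = defaultdict(list)
    for d, v in obs:
        by_month[d.month].append(v)
    overall = mean(v for _, v in obs)
    return {m: mean(vs) / overall for m, vs in sorted(by_month.items())}


# Hypothetical closed-sales counts (April and December only, two years).
sales = [
    (date(2023, 4, 30), 120.0), (date(2024, 4, 30), 140.0),
    (date(2023, 12, 31), 60.0), (date(2024, 12, 31), 80.0),
]
print(seasonal_index(sales))  # April about 1.3, December about 0.7
```

A strong, repeating pattern in this index is one signal that a seasonal model such as SARIMA deserves a look.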

Create Time-Series Splits

After cleaning your data, partition it in a way that maintains its chronological structure. This step is crucial for ensuring that your performance metrics reflect the model's ability to forecast real-world scenarios.

Why Random Splits Don’t Work for Real Estate

A common mistake is treating real estate data as static and randomly splitting it into training and testing sets. This can lead to data leakage, where future information inadvertently influences the training process. For example, if you randomly shuffle observations from 2023 through 2025 into training and test sets, the model may learn from 2025 data and then be tested on 2024 observations. The results will likely be overly optimistic and not representative of the model's ability to predict genuinely unknown future periods [1][2].

Implementing Temporal Splits

To avoid this, sort your data by date and reserve the most recent 20–30% for validation and testing. Use the earlier 70–80% for training. This mirrors real-world deployment, where past data is used to predict future outcomes.

For example, if you have monthly data from January 2020 to December 2025, you could allocate January 2020 to June 2024 for training and July 2024 to December 2025 for testing. For more precise validation, create three sets: a training set (60–70% of historical data), a validation set (10–15% for tuning), and a test set (20–30% of the most recent data) [2].
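The three-way chronological split can be sketched as follows, assuming rows are `(date, value)` pairs; the 70/10/20 default fractions are one choice within the ranges above, and the placeholder values stand in for real observations. The function sorts by date first, so the newest data always lands in the test set regardless of input order.

```python
from datetime import date
from typing import List, Sequence, Tuple

Row = Tuple[date, float]


def chronological_split(
    rows: Sequence[Row], train_frac: float = 0.7, val_frac: float = 0.1
) -> Tuple[List[Row], List[Row], List[Row]]:
    """Sort by date, then cut into train / validation / test with the newest data last."""
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty test share")
    ordered = sorted(rows, key=lambda r: r[0])
    n_train = int(len(ordered) * train_frac)
    n_val = int(len(ordered) * val_frac)
    return (
        ordered[:n_train],
        ordered[n_train:n_train + n_val],
        ordered[n_train + n_val:],
    )


# 72 monthly observations, January 2020 through December 2025 (placeholder values).
months = [date(y, m, 1) for y in range(2020, 2026) for m in range(1, 13)]
rows = [(d, float(i)) for i, d in enumerate(months)]
train, val, test = chronological_split(rows)
print(len(train), len(val), len(test))  # 50 7 15
```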

Using Walk-Forward Validation

A single train/test split can give a quick performance estimate, but it may not be reliable across different time periods. Walk-forward validation offers a more robust approach by retraining and testing the model across multiple time windows. For instance, start with 36 months of historical data, train your model, and forecast the next month. Then, move forward one month, add that data to your training set, retrain, and forecast the following month. This method simulates real-world deployment and shows how the model performs under varying market conditions [2].
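The walk-forward loop can be sketched in a few lines. This is a simplified illustration: the "model" is a pluggable function that receives only the history available at each forecast origin, with a naive last-value forecaster as the default (a real model would be refit inside that function). The rent series is hypothetical.

```python
from typing import Callable, List, Sequence

Forecaster = Callable[[Sequence[float]], float]


def naive_last_value(history: Sequence[float]) -> float:
    """Baseline forecaster: next month equals the last observed month."""
    return history[-1]


def walk_forward_errors(
    series: Sequence[float],
    initial_train: int = 36,
    forecaster: Forecaster = naive_last_value,
) -> List[float]:
    """One-step-ahead walk-forward evaluation with an expanding training window.

    At each origin t the forecaster sees only series[:t] (data available at the
    forecast date) and predicts series[t]. The observed value then joins the
    history for the next step.
    """
    errors = []
    for t in range(initial_train, len(series)):
        history = series[:t]
        prediction = forecaster(history)
        errors.append(series[t] - prediction)  # actual minus forecast
    return errors


# Hypothetical 40 months of rents rising $5/month: naive forecasts lag by $5.
rents = [1500.0 + 5 * i for i in range(40)]
print(walk_forward_errors(rents))  # [5.0, 5.0, 5.0, 5.0]
```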

Preventing Look-Ahead Bias

When working with panel data (tracking multiple properties over time), ensure that your features only include information available at the forecast date. Use lagged variables to avoid look-ahead bias. For example, use prior-month occupancy rates to predict current-month rents, not the current values. Similarly, if forecasting Q2 2025 net operating income, restrict your model to economic data available through Q1 2025 or earlier. This ensures your validation results align with real-world scenarios [1].
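For panel data, a lagged feature must be computed within each property, never across properties. The sketch below assumes records are dicts with `property_id`, a sortable `period` string, and the feature field, and that no periods are missing within a property; `add_lag` is a hypothetical helper name and the occupancy values are made up.

```python
from collections import defaultdict
from typing import Any, Dict, List


def add_lag(
    records: List[Dict[str, Any]], field: str = "occupancy", lag: int = 1
) -> List[Dict[str, Any]]:
    """Attach `field` from `lag` periods earlier for the same property.

    The first `lag` periods of each property get None because no prior
    value was known at that forecast date.
    """
    by_property: Dict[Any, List[Dict[str, Any]]] = defaultdict(list)
    for r in records:
        by_property[r["property_id"]].append(r)
    out = []
    for rows in by_property.values():
        rows = sorted(rows, key=lambda r: r["period"])
        for i, r in enumerate(rows):
            new = dict(r)  # copy so the caller's records are not mutated
            new[f"{field}_lag{lag}"] = rows[i - lag][field] if i >= lag else None
            out.append(new)
    return out


panel = [
    {"property_id": "P1", "period": "2025-02", "occupancy": 0.93},
    {"property_id": "P1", "period": "2025-01", "occupancy": 0.91},
    {"property_id": "P2", "period": "2025-01", "occupancy": 0.88},
    {"property_id": "P2", "period": "2025-02", "occupancy": 0.90},
]
for row in add_lag(panel):
    print(row["property_id"], row["period"], row["occupancy_lag1"])
```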

With your data now partitioned chronologically, you’re ready to validate your forecasts under actual market conditions.

Select and Apply Validation Strategies

Once your data is cleaned and organized with a temporal structure, the next step is to pick a validation method that aligns with your forecasting goals. The choice of strategy depends heavily on the type of prediction you’re tackling - whether it’s a single-point property valuation or a time-series forecast for metrics like rents, prices, or occupancy rates. This decision is crucial because your validation approach directly impacts how well your model's performance reflects real-world conditions.

Choose Validation Methods

The validation technique you use should fit the nature of your forecasting problem. Static property valuations and time-series predictions require distinct approaches to avoid skewed performance results.

K-Fold Cross-Validation for Static Valuations

For models estimating property values at a specific point in time - like automated valuation models (AVMs) for homes or commercial property appraisals - k-fold cross-validation is a dependable option. This method divides your dataset into k equal parts (commonly 5 or 10 folds). The model is trained on k-1 folds and validated on the remaining fold, cycling through all folds to provide a reliable generalization estimate.

In real estate, k-fold validation needs some tweaks to handle unique data challenges. For example, group data by property or parcel ID to prevent duplicate entries from appearing in both training and validation sets, which is a common issue with MLS data. Stratifying data by market segments, such as price ranges, ensures a balanced distribution. Additionally, grouping by ZIP code or neighborhood can reduce the risk of spatial leakage, helping the model generalize better to new locations.
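Grouped k-fold is the core of the property-ID and ZIP-code tweaks above: every row for a given group lands in the same validation fold. Here is a stdlib sketch that assigns shuffled groups to folds round-robin; it balances group counts rather than row counts and does not stratify. scikit-learn's `GroupKFold` offers a library version of the same idea.

```python
import random
from typing import Hashable, Iterator, List, Sequence, Tuple


def group_kfold(
    group_ids: Sequence[Hashable], k: int = 5, seed: int = 0
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, val_idx) pairs where every group sits in exactly one fold.

    Use a property/parcel ID to keep repeat listings of one property together,
    or a ZIP code / neighborhood to reduce spatial leakage.
    """
    groups = sorted(set(group_ids), key=str)
    if len(groups) < k:
        raise ValueError("need at least k distinct groups")
    random.Random(seed).shuffle(groups)  # reproducible random group order
    fold_of = {g: i % k for i, g in enumerate(groups)}  # round-robin assignment
    for fold in range(k):
        val = [i for i, g in enumerate(group_ids) if fold_of[g] == fold]
        train = [i for i, g in enumerate(group_ids) if fold_of[g] != fold]
        yield train, val


# Hypothetical MLS rows: P1 and P3 were each listed twice.
parcel_ids = ["P1", "P1", "P2", "P3", "P3", "P4", "P5", "P6"]
for train_idx, val_idx in group_kfold(parcel_ids, k=3):
    print(sorted({parcel_ids[i] for i in val_idx}))
```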

Rolling-Origin Validation for Time-Series Forecasts

When forecasting variables that change over time - like monthly rents, quarterly net operating income, or annual price trends - rolling-origin validation (also known as walk-forward validation) is the preferred approach. Unlike k-fold cross-validation, this method respects the chronological order of data, avoiding the mixing of past and future information.

Here’s how it works: Start with a training window, say five years of monthly data on Class B multifamily rents in Dallas. Train the model on this window and forecast the next month. Once that month's actual rents are observed, add them (not the forecast) to the training set, retrain the model, and predict the following month. Repeat this process to create a sequence of out-of-sample forecasts across various market conditions. This method mimics real-world deployment and highlights how the model performs under shifting market dynamics.

Simple Hold-Out Splits

For early-stage experimentation or when working with limited data, a simple hold-out split can suffice. Typically, 70–80% of the dataset is used for training, while the most recent 20–30% is reserved for testing. For instance, if your data spans January 2018 to December 2025 (96 months), you might train on January 2018 to December 2023 (72 months, 75%) and test on January 2024 to December 2025 (24 months, 25%). Avoid splitting data randomly across time, as this can lead to data leakage and overly optimistic performance estimates.

With your validation strategy in place, the next step is to measure the model’s performance using error metrics.

Use Error Metrics for Real Estate Forecasting

Once you've chosen a validation method, you’ll need error metrics to evaluate how well the model performs. These metrics highlight different aspects of accuracy, and the best choice depends on the specific goals and audience for your forecasts.

Mean Absolute Error (MAE)

MAE calculates the average absolute difference between predicted and actual values, expressed in the same units as the target. For instance, if a model predicting single-family home prices has an MAE of $18,000, its predictions miss the actual price by $18,000 on average per property. MAE is straightforward and particularly effective for communicating error magnitudes to stakeholders like investors and brokers. It is also less sensitive to outliers than RMSE: each error contributes in proportion to its size rather than its square, so a few extreme misses distort it less.

Root Mean Squared Error (RMSE)

RMSE amplifies the impact of larger errors by squaring the differences before averaging. This makes it especially useful in scenarios where big mistakes can have outsized consequences - like rent forecasts for institutional portfolios. For example, between two models with similar MAE values, the one with a lower RMSE is less likely to produce large, costly errors. RMSE is often used to set risk thresholds, such as rejecting models with an RMSE above a certain level tied to financial performance metrics like internal rate of return (IRR).

For context, one study of machine learning for real estate valuation reported that a Random Forest model achieved a mean absolute error of approximately 8.5% (expressed in percentage terms) on test data, against a target accuracy of 5–7% considered acceptable because it is comparable to typical realtor fees [3].

Mean Absolute Percentage Error (MAPE)

MAPE expresses errors as a percentage, making it ideal for comparing performance across different markets or asset classes. For example, a model predicting both $200,000 suburban homes and $2 million downtown condos might have similar MAE values for both, but the MAPE would reveal larger relative errors for lower-priced properties. However, MAPE becomes unreliable when actual values are very small or near zero, such as during early lease-up periods or for nominal rents in subsidized units. To address this, you can set a minimum threshold (e.g., only calculate MAPE for rents above $200) or pair it with MAE or RMSE for a more comprehensive evaluation.

| Error Metric | Units | Best Use Case | Key Advantage | Key Limitation |
|---|---|---|---|---|
| MAE | Same as target (e.g., USD) | Symmetric errors; dollar impact matters | Easy to interpret; less sensitive to outliers than RMSE | Weights all errors linearly, so rare large misses are not emphasized |
| RMSE | Same as target (e.g., USD) | Large errors are especially costly | Penalizes big mistakes more heavily | Sensitive to outliers |
| MAPE | Percentage (%) | Comparing across markets or asset classes | Enables scale-independent comparisons | Unstable when actual values are near zero |
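The three metrics in the table are short enough to write out directly. This is a plain-Python sketch (NumPy or scikit-learn equivalents would normally be used), and the `min_actual` parameter is an illustrative way to implement the "only calculate MAPE for rents above a threshold" safeguard described above.

```python
import math
from typing import Sequence


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error, in the target's units (e.g., USD)."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean squared error; squaring weights large misses more heavily."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual: Sequence[float], predicted: Sequence[float], min_actual: float = 0.0) -> float:
    """Mean absolute percentage error (in %), skipping actuals with |a| <= min_actual."""
    pairs = [(a, p) for a, p in zip(actual, predicted) if abs(a) > min_actual]
    if not pairs:
        raise ValueError("no observations above min_actual")
    return 100.0 * sum(abs(a - p) / abs(a) for a, p in pairs) / len(pairs)


actual = [100.0, 200.0, 300.0]
predicted = [110.0, 190.0, 330.0]
print(round(mae(actual, predicted), 2))   # 16.67
print(round(rmse(actual, predicted), 2))  # 19.15
print(round(mape(actual, predicted), 2))  # 8.33
```

Note how the single $30 miss pushes RMSE above MAE; that gap is the signal to look for when large errors are costly.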

Combining Metrics for a Complete Picture

Instead of relying on a single metric, use a mix of metrics across multiple validation runs. Track MAE, RMSE, and MAPE for each fold in k-fold validation or each window in rolling-origin validation. Look for models that perform consistently across different time periods, markets, and asset classes. For long-term planning, a model with slightly higher MAE but more stable performance across economic cycles (like rising interest rates or demand shocks) may be a better choice.

Tools like CoreCast simplify this process by centralizing historical property data, automating metric calculations, and enabling backtesting. This streamlined workflow helps ensure your real estate forecasts remain accurate and actionable in ever-changing market conditions.

Evaluate Model Accuracy and Stability

Once you've calculated error metrics across your validation runs, the next step is to interpret them. Metrics like Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) give you a sense of average prediction errors, but they don't always reveal deeper issues like model weaknesses or shifts in the market. To truly understand your model's performance, you need to dive into patterns and assess how reliable it is across various scenarios.

Analyze Performance Metrics

Breaking down forecasts by rent bands, geography, and time horizons can help uncover biases. Performance tables and visual tools (like histograms or heat maps) are especially useful for spotting systematic over- or under-predictions. For example, in multifamily portfolios, you might divide rent bands into categories such as:

- Under $1.50 per square foot per month

- $1.50–$3.00 per square foot per month

- Over $3.00 per square foot per month

For each segment, record metrics like MAE, RMSE, Mean Absolute Percentage Error (MAPE), bias (mean error), and observation counts. Similarly, assess performance by geographic indicators, such as Metropolitan Statistical Area (MSA), submarket, or ZIP code, and by forecast horizon. A model that works well for 3-month predictions might struggle with 12- or 24-month forecasts.

Bias is a critical factor to watch. If your model consistently overestimates rents in high-end markets or underestimates in low-rent areas, it could lead to flawed strategic decisions. To catch these trends, calculate the mean error for each segment and use visual tools like histograms, box plots of residuals, or heat maps by ZIP code. These can highlight areas where additional feature engineering or model adjustments may be needed.
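A segment table of count, bias, and MAE can be produced with a small grouping helper. This sketch uses the rent bands listed above (USD per square foot per month), bands each observation by its actual rent (an assumption; banding by forecast is also defensible), and defines bias as predicted minus actual so that a positive value means over-prediction. The sample rents are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Sequence


def rent_band(rent_psf_month: float) -> str:
    """Bands from the list above, in USD per square foot per month."""
    if rent_psf_month < 1.50:
        return "under $1.50"
    if rent_psf_month <= 3.00:
        return "$1.50-$3.00"
    return "over $3.00"


def bias_by_band(
    actual: Sequence[float], predicted: Sequence[float]
) -> Dict[str, Dict[str, float]]:
    """Count, bias (mean of predicted - actual), and MAE per rent band."""
    errors: Dict[str, List[float]] = defaultdict(list)
    for a, p in zip(actual, predicted):
        errors[rent_band(a)].append(p - a)  # positive = over-prediction
    return {
        band: {"n": len(e), "bias": mean(e), "mae": mean(abs(x) for x in e)}
        for band, e in errors.items()
    }


report = bias_by_band([1.20, 1.40, 2.00, 3.50], [1.30, 1.50, 2.00, 3.20])
for band, stats in report.items():
    print(band, stats["n"], round(stats["bias"], 2), round(stats["mae"], 2))
```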

When comparing models - say, ARIMA versus gradient boosting - look beyond average errors. A model with slightly higher MAE but much lower bias might still be the better choice if it avoids systematic mispricing. Always include a simple baseline model, like naïve year-over-year growth or linear regression, to confirm that added complexity is actually improving performance.
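To make the baseline comparison concrete, here is a sketch of one possible reading of a "naive year-over-year growth" baseline (same month last year, grown at the latest observed year-over-year rate) plus a simple skill score. Both function names and the formulation are assumptions for illustration, not a standard definition.

```python
from typing import Sequence


def naive_yoy_forecast(history: Sequence[float]) -> float:
    """Same month last year, grown at the latest observed year-over-year rate.

    Needs at least 13 months of monthly history.
    """
    yoy_growth = history[-1] / history[-13]  # latest month vs. 12 months earlier
    return history[-12] * yoy_growth


def skill_score(model_mae: float, baseline_mae: float) -> float:
    """1 - MAE_model / MAE_baseline: > 0 beats the baseline, <= 0 adds no value."""
    return 1.0 - model_mae / baseline_mae


# Hypothetical: a gradient-boosting model with MAE $42 vs. a baseline MAE of $50.
print(round(skill_score(42.0, 50.0), 2))  # 0.16
```

Compute both MAEs over the same validation windows; a skill score near zero suggests the added model complexity is not paying for itself.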

Tools like CoreCast simplify these evaluations by centralizing data and automating metric calculations. This allows you to compare forecasts to actual outcomes at the portfolio level, underwrite assets, track deal pipelines, and visualize performance by submarket or property type. This clarity helps pinpoint where models work well and where refinements are necessary.

The next step? Test how your metrics hold up under different market conditions to ensure the model's durability.

Test Stability Across Time Periods

Accuracy alone isn't enough - your model needs to remain stable across varying market cycles. A model that overfits to a specific market regime can easily fail when conditions change. Real estate markets go through cycles of expansion, contraction, shocks, and recovery, so testing stability over time is crucial.

Evaluate Performance Across Market Regimes

Segment your historical data into distinct periods, such as pre-2020, 2020–2021, and post-2021, and then calculate metrics like MAE, RMSE, MAPE, and bias for each period. A robust model should show only moderate performance dips during volatile times, without sudden spikes in errors or shifts in bias.

Use Rolling and Expanding Windows

Rolling (sliding) window validation retrains the model on a fixed-length window of the most recent data, dropping the oldest period each time the window moves forward, and forecasts the next period. Expanding window validation, on the other hand, keeps all earlier data, so the training set grows with each step. Both mimic real-world deployment. Plot metrics like MAE and RMSE over these windows to detect trends. For instance, a gradual increase in error could signal concept drift, indicating the need for updates to your model.
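The difference between the two window schemes comes down to where each training window starts. The index generator below is an illustrative sketch (scikit-learn's `TimeSeriesSplit` offers a library version with a `max_train_size` option for fixed-length windows); `window_splits` is a hypothetical name.

```python
from typing import Iterator, List, Tuple


def window_splits(
    n: int, initial: int, horizon: int = 1, step: int = 1, mode: str = "expanding"
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_idx, test_idx) index lists over a time-ordered series of length n.

    expanding: training always starts at index 0 and grows by `step` each round.
    rolling:   training keeps a fixed length of `initial` and slides forward.
    """
    if mode not in ("expanding", "rolling"):
        raise ValueError("mode must be 'expanding' or 'rolling'")
    origin = initial  # first index that is forecast
    while origin + horizon <= n:
        start = 0 if mode == "expanding" else origin - initial
        yield list(range(start, origin)), list(range(origin, origin + horizon))
        origin += step


for train_idx, test_idx in window_splits(6, initial=3, mode="rolling"):
    print(train_idx, test_idx)
# [0, 1, 2] [3]
# [1, 2, 3] [4]
# [2, 3, 4] [5]
```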

Watch for Structural Breaks and Drift

Real estate markets are prone to abrupt changes, whether due to policy shifts, major developments, or economic shocks. Keep an eye on error metrics over rolling windows (6–12 months) and check for significant changes in mean error, variance, or feature relationships. If you notice a sudden spike in MAPE or a new pattern of bias, it might mean your model's assumptions no longer hold. In such cases, you may need to refresh the training data, rework features, tweak hyperparameters, or even implement adaptive retraining strategies.
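A simple drift alarm compares trailing-window MAE against the error level seen early in the out-of-sample period. This is a heuristic sketch, not a formal structural-break test; the six-period window, 12-period baseline, and 1.5x threshold are illustrative assumptions to tune for your portfolio.

```python
from statistics import mean
from typing import List, Sequence


def flag_error_drift(
    abs_errors: Sequence[float],
    window: int = 6,
    baseline_periods: int = 12,
    ratio: float = 1.5,
) -> List[bool]:
    """Flag periods where trailing-window MAE exceeds `ratio` x the baseline MAE.

    The baseline is the MAE over the first `baseline_periods` out-of-sample
    periods; only later periods are eligible for a flag.
    """
    baseline = mean(abs_errors[:baseline_periods])
    flags = []
    for t in range(len(abs_errors)):
        if t < baseline_periods or t + 1 < window:
            flags.append(False)
            continue
        recent = mean(abs_errors[t + 1 - window : t + 1])
        flags.append(recent > ratio * baseline)
    return flags


# Hypothetical absolute errors that triple after period 15.
errs = [1.0] * 15 + [3.0] * 6
print(flag_error_drift(errs).index(True))  # 16
```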

Tie Stability to Business Decisions

Stable models are essential for making sound business decisions. Even if a model has modest average errors, its ability to perform consistently across market cycles can make it a stronger foundation for strategic planning. When presenting stability results to stakeholders, focus on how consistent performance supports reliable decision-making and reduces risk in unpredictable conditions.

Apply Domain Knowledge and Business Constraints

While statistical validation is essential, it's only part of the equation when it comes to creating reliable forecasts. Integrating domain knowledge ensures that your projections not only hit the mark mathematically but also make sense in the context of real-world market dynamics. Even the most statistically sound models can produce forecasts that clash with practical realities. Real estate markets, for instance, are influenced by physical, economic, and regulatory factors that no algorithm can fully capture from data alone. Your validation process must confirm that projections align with market realities and operational constraints.

Here’s how you can ensure your forecasts stay grounded in the real world.

Perform Domain Checks

Domain checks act as reality checks for your forecasts, flagging results that contradict fundamental real estate principles. Begin by setting realistic boundaries for key variables using historical data, market insights, and input from industry professionals like brokers and appraisers. These boundaries act as guardrails, catching outputs that might be statistically plausible but operationally impossible.

Here are some critical variables to validate:

- Rent Growth: In most mature U.S. markets, annual rent growth typically stays within a few percentage points above inflation under normal conditions. A reasonable range might be between -5% and +8%, accounting for downturns and strong recoveries. Compare your forecasts to historical trends for the same metro area and asset class. Anything outside these bounds should raise a red flag.

- Occupancy: Stabilized multifamily and office properties usually maintain occupancy rates of 90%–95%. Set thresholds - like 95% for multifamily and 92% for office - and require manual review for forecasts exceeding these limits. For new developments, validate the lease-up pace against submarket absorption rates. For instance, if a model predicts a 200-unit property will go from 0% to 90% occupancy in six months, check whether that aligns with local market conditions.

- Cap Rates: Forecasted going-in and exit cap rates should align with historical ranges for the specific market and asset class. For example, if recent comparable sales indicate cap rates between 6.5% and 8.0%, but your model assumes a 5.0% exit cap rate, it’s likely overestimating value. Reverse-calculate implied prices from your forecast and compare them to recent transaction data.

- Operating Expenses: For multifamily properties, expense ratios generally fall between 30% and 55% of effective gross income. Compare your forecasts to historical operating statements and industry benchmarks, and flag any outliers.
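The checks above translate naturally into a small rules table. In this sketch the bounds are copied from the ranges in the list and should be treated as illustrative placeholders to calibrate per market and asset class; the exit cap rate is checked against a comparable-sales range you pass in, and `implied_value` applies direct capitalization (value = NOI / cap rate). The forecast values are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Illustrative guardrails from the ranges above; calibrate before use.
BOUNDS: Dict[str, Tuple[float, float]] = {
    "annual_rent_growth": (-0.05, 0.08),
    "occupancy_multifamily": (0.0, 0.95),
    "occupancy_office": (0.0, 0.92),
    "opex_ratio_multifamily": (0.30, 0.55),  # share of effective gross income
}


def domain_flags(
    forecast: Dict[str, float], comp_cap_range: Optional[Tuple[float, float]] = None
) -> List[str]:
    """Return human-readable issues for any forecast value outside its bounds."""
    issues = []
    for name, (lo, hi) in BOUNDS.items():
        if name in forecast and not lo <= forecast[name] <= hi:
            issues.append(f"{name}={forecast[name]:.3f} outside [{lo}, {hi}]")
    if comp_cap_range is not None and "exit_cap_rate" in forecast:
        lo, hi = comp_cap_range
        cap = forecast["exit_cap_rate"]
        if not lo <= cap <= hi:
            issues.append(f"exit_cap_rate={cap:.3f} outside comps [{lo}, {hi}]")
    return issues


def implied_value(noi: float, cap_rate: float) -> float:
    """Direct capitalization: value = NOI / cap rate."""
    return noi / cap_rate


forecast = {"annual_rent_growth": 0.11, "occupancy_multifamily": 0.94, "exit_cap_rate": 0.05}
print(domain_flags(forecast, comp_cap_range=(0.065, 0.080)))
# A 5.0% exit cap on $1,000,000 of NOI implies $20M, versus about $15.4M at 6.5%.
print(implied_value(1_000_000, 0.05), implied_value(1_000_000, 0.065))
```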

Different asset classes require tailored checks. For example, office and industrial properties should account for factors like lease terms, rollover schedules, tenant improvement costs, and leasing commissions. A downtown office model should reflect typical lease lengths (five to ten years), downtime between tenants, and re-tenanting costs, which can range from $30 to $60 per square foot or more.

Platforms like CoreCast simplify this process by centralizing data on properties, portfolios, and markets. With tools like historical benchmarks and templated domain rules, CoreCast can automatically flag issues - such as occupancy rates exceeding 95% or cap rates below market norms - before forecasts are submitted to investment committees.

Visual checks are just as crucial as numerical ones. Plot trends for forecasted rents, occupancy, and values against historical data. Sudden spikes or sharp divergences from past patterns often signal domain violations that might not be apparent from metrics alone.

Once you’ve confirmed that forecasts are economically sound, the next step is to test how they hold up under different market conditions.

Run Scenario Tests

Even if a model adheres to domain constraints in normal conditions, it might falter when the market shifts. Scenario and stress tests help reveal how forecasts behave under various economic and market scenarios, ensuring the model doesn’t assume a perpetually stable environment.

Scenario tests involve creating alternative future scenarios, such as a base case, an upside case, and multiple downside cases. For each scenario, define assumptions about macroeconomic factors like interest rates, employment, GDP growth, and inflation, and observe how your model responds. For example:

- A base case might assume 3%–4% annual rent growth, stable occupancy, and a slight cap rate expansion of 25 basis points.

- An upside case could include stronger rent growth (5%–6%) and cap rate compression.

- Downside scenarios might feature rent declines of 2%–4%, slower lease-up rates, higher operating expenses, and cap rate expansions of 100–200 basis points.
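A compact way to see how these cases translate into value is to run each one through a simple exit-value calculation. This is a deliberately simplified sketch under loudly stated assumptions: NOI grows at the scenario's annual rent-growth rate for the whole hold, growth rates are treated as annual, the upside cap compression of 25 bp is an assumed magnitude (the cases above do not specify one), and the NOI and entry cap are hypothetical.

```python
from typing import Dict

# Assumed scenario parameters loosely following the cases above.
SCENARIOS: Dict[str, Dict[str, float]] = {
    "base": {"growth": 0.035, "cap_change_bps": 25},
    "upside": {"growth": 0.055, "cap_change_bps": -25},  # assumed compression
    "downside": {"growth": -0.03, "cap_change_bps": 150},
}


def exit_value(
    noi: float, growth: float, years: int, entry_cap: float, cap_change_bps: float
) -> float:
    """Exit value = NOI grown for `years` divided by the shifted exit cap rate."""
    exit_noi = noi * (1 + growth) ** years  # simplification: NOI tracks rent growth
    exit_cap = entry_cap + cap_change_bps / 10_000
    return exit_noi / exit_cap


for name, s in SCENARIOS.items():
    value = exit_value(1_000_000, s["growth"], 5, 0.065, s["cap_change_bps"])
    print(f"{name:>8}: ${value:,.0f}")
```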

Stress tests push specific variables to extreme but plausible levels. Key factors to stress include interest rates, unemployment, vacancy rates, and exit cap rates. For U.S. markets, interest rate stresses might simulate a 150–300 basis point increase in borrowing costs, similar to the shocks seen in 2022–2023. Unemployment stresses could add 2–4 percentage points, reflecting downturns like the 2008–2009 financial crisis. Vacancy stresses might assume a temporary drop in occupancy - say, from 92% to 80% over 12–18 months - to test cash flow resilience.

To implement these tests, input alternative economic paths into your model. For stress tests, apply scenarios like a vacancy dip in year two, a 150-basis-point cap rate expansion, or a 10% rise in operating expenses. Then, recalculate key metrics like net present value (NPV), internal rate of return (IRR), and debt service coverage ratio (DSCR) for each scenario.
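
To make the recalculation step concrete, here is a minimal Python sketch that runs a base case and a downside case through one simplified levered cash-flow model. It reports NPV, IRR, and the lowest annual DSCR for each. Everything in it is an assumption for illustration: the `Scenario` fields, the interest-only loan, the $10 million price, the rent and expense figures, the 8% discount rate, and the convention of pricing the exit off final-year NOI. The IRR uses plain bisection and returns `None` when no rate in the search bracket sets NPV to zero, which can happen in severe downside cases.

```python
from dataclasses import dataclass

@dataclass
class Scenario:
    name: str
    rent_growth: float    # annual growth in potential gross rent
    occupancy: float      # average occupied share over the hold
    opex_growth: float    # annual growth in operating expenses
    exit_cap_rate: float  # cap rate applied to final-year NOI at sale

def project_noi(s, base_rent, base_opex, years):
    """Annual NOI = occupied rent - operating expenses (no other income, no reserves)."""
    return [
        base_rent * (1 + s.rent_growth) ** t * s.occupancy
        - base_opex * (1 + s.opex_growth) ** t
        for t in range(years)
    ]

def npv(rate, flows):
    """flows[0] occurs today (t=0); flows[t] occurs at the end of year t."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(flows))

def irr(flows, lo=-0.9, hi=1.0, iters=200):
    """Bisection IRR; returns None when NPV does not change sign on [lo, hi]."""
    f_lo, f_hi = npv(lo, flows), npv(hi, flows)
    if (f_lo > 0) == (f_hi > 0):
        return None
    for _ in range(iters):
        mid = (lo + hi) / 2
        f_mid = npv(mid, flows)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return (lo + hi) / 2

def evaluate(s, price=10_000_000, loan=6_500_000, loan_rate=0.06,
             base_rent=1_200_000, base_opex=450_000, years=5, discount=0.08):
    debt_service = loan * loan_rate          # interest-only loan: balance stays at `loan`
    noi = project_noi(s, base_rent, base_opex, years)
    flows = [-(price - loan)]                # equity invested at t=0
    for t, n in enumerate(noi):
        cf = n - debt_service
        if t == years - 1:                   # sell at end of hold and repay the loan
            cf += n / s.exit_cap_rate - loan
        flows.append(cf)
    return {
        "npv": npv(discount, flows),
        "irr": irr(flows),
        "min_dscr": min(n / debt_service for n in noi),
    }

scenarios = [
    Scenario("base", rent_growth=0.035, occupancy=0.93, opex_growth=0.03, exit_cap_rate=0.069),
    Scenario("downside", rent_growth=-0.03, occupancy=0.85, opex_growth=0.04, exit_cap_rate=0.08),
]
for s in scenarios:
    r = evaluate(s)
    irr_txt = "n/a" if r["irr"] is None else f"{r['irr']:.1%}"
    print(f"{s.name}: NPV ${r['npv']:,.0f}, IRR {irr_txt}, min DSCR {r['min_dscr']:.2f}x")
```

With these inputs the base case clears a 1.20x DSCR comfortably, while the downside case falls below 1.0x by the final year. Replacing `project_noi` with your own model's NOI projection leaves the rest of the harness unchanged.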

Business constraints, such as lender covenants and investor return expectations, should also be part of these tests. Many lenders, for instance, underwrite deals to DSCR thresholds of 1.20x–1.40x and loan-to-value (LTV) ratios between 60% and 75%. If a modest interest rate hike causes DSCR to fall below 1.20x, this signals a financing risk that needs attention.
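
A covenant check can be layered onto the same idea. The sketch below is a hypothetical helper, not any lender's actual test. It recomputes DSCR on a fully amortizing loan as the interest rate is shocked upward, and it also reports LTV. It assumes annual payments, a 30-year amortization, and that the shocked rate applies to the whole balance (as with floating-rate debt or a refinancing). Real loan terms such as monthly payments, interest-only periods, or rate caps would change the numbers.

```python
def annual_debt_service(loan, rate, amort_years=30):
    """Level annual payment on a fully amortizing loan (annual payments, a simplification)."""
    if rate == 0:
        return loan / amort_years
    return loan * rate / (1 - (1 + rate) ** -amort_years)

def covenant_check(noi, value, loan, base_rate, shock_bps=0,
                   min_dscr=1.20, max_ltv=0.75, amort_years=30):
    """Return DSCR and LTV after a rate shock, with pass/fail flags against the limits."""
    rate = base_rate + shock_bps / 10_000          # 100 bps = 1 percentage point
    dscr = noi / annual_debt_service(loan, rate, amort_years)
    ltv = loan / value
    return {
        "rate": rate,
        "dscr": dscr,
        "ltv": ltv,
        "dscr_ok": dscr >= min_dscr,
        "ltv_ok": ltv <= max_ltv,
    }

# Illustrative inputs only: $666,000 NOI, $10M value, $6.5M loan at 6%
for bps in (0, 100, 200, 300):
    r = covenant_check(noi=666_000, value=10_000_000, loan=6_500_000,
                       base_rate=0.06, shock_bps=bps)
    status = "OK" if r["dscr_ok"] else "BREACH"
    print(f"+{bps} bps -> rate {r['rate']:.2%}, DSCR {r['dscr']:.2f}x {status}")
```

With these illustrative inputs, DSCR starts near 1.41x, still clears 1.20x after a 100 bps increase, and breaches it after 200 bps.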

Time-based stress tests can be particularly revealing. Apply shocks at different points during the hold period - like an interest rate hike in year two or a vacancy spike in year three - to see how sensitive cash flows and covenants are to timing. Early shocks might be manageable if rents recover before exit, but late-stage shocks could severely impact returns.
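
Timing effects show up even in a toy model. In this sketch, with hypothetical parameters throughout (including the $472,000 annual debt service and 6.9% exit cap rate), NOI takes a 15% hit in a chosen year and recovers linearly over two years. The exit is priced off final-year NOI. An early shock produces the lowest DSCR but has fully recovered by the sale. A shock in the final year leaves DSCR higher but cuts the exit value, which is the trade-off described above.

```python
def noi_path(base_noi, growth, years, shock_year=None, shock_pct=0.15, recovery_years=2):
    """Annual NOI; in shock_year NOI falls by shock_pct, then recovers linearly
    over recovery_years. Year index 0 is the first year of the hold."""
    path = []
    for t in range(years):
        noi = base_noi * (1 + growth) ** t
        if shock_year is not None and t >= shock_year:
            remaining = max(0.0, 1 - (t - shock_year) / recovery_years)
            noi *= 1 - shock_pct * remaining
        path.append(noi)
    return path

def shock_summary(shock_year, base_noi=666_000, growth=0.03, years=5,
                  debt_service=472_000, exit_cap=0.069):
    """Lowest DSCR over the hold and exit value for a shock in the given year."""
    path = noi_path(base_noi, growth, years, shock_year)
    return {
        "min_dscr": min(n / debt_service for n in path),
        "exit_value": path[-1] / exit_cap,   # sale priced off final-year NOI
    }

for shock_year in (None, 1, 2, 3, 4):
    r = shock_summary(shock_year)
    label = "no shock" if shock_year is None else f"shock in year {shock_year + 1}"
    print(f"{label:>16}: min DSCR {r['min_dscr']:.2f}x, exit value ${r['exit_value']:,.0f}")
```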

When presenting scenario and stress test results to stakeholders, focus on actionable insights. Show how each scenario impacts IRR, equity multiples, downside protection, and covenant compliance. Highlight the assumptions driving the most significant outcome changes, so the team knows where to prioritize due diligence and risk management.

CoreCast streamlines these workflows by offering prebuilt scenario libraries and stress testing modules. You can configure templates for various asset classes - multifamily, office, industrial, retail - ensuring consistent application of domain checks and stress tests across your portfolio. CoreCast also enables you to integrate scenario results into polished reports for investment committees and capital partners, ensuring everyone works from the same set of assumptions and outcomes.

When a model passes statistical validation but fails domain checks - like predicting 120% occupancy or cap rates below any observed transactions - document the issue, investigate the cause (often feature leakage or model misspecification), and either adjust the model or override the forecast with a documented rationale. Governance materials should record what was flagged, who approved adjustments, and which business rules were applied. This transparency ensures that auditors, investors, and risk teams understand why certain statistically "better" models were set aside in favor of economically sound ones.
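
One way to keep this flag, investigate, and document loop auditable is to have the domain check write directly into a governance log. The sketch below is an illustration built on assumptions. The rule names, the property ID `MF-0142`, the approver label, and the bounds (a 4.5% to 8.5% comp range for exit cap rates, ±10% for rent growth) are hypothetical placeholders that you would replace with your own market data and policies.

```python
from dataclasses import dataclass, field
from datetime import date

@dataclass(frozen=True)
class DomainRule:
    name: str
    metric: str
    low: float
    high: float

@dataclass
class GovernanceEntry:
    property_id: str
    rule: str
    value: float
    action: str = "open"                  # later "model_adjusted" or "overridden"
    approved_by: str = ""
    rationale: str = ""
    logged_on: date = field(default_factory=date.today)

def check_forecast(property_id, forecast, rules, log):
    """Append one GovernanceEntry to `log` per metric outside its plausible range."""
    for rule in rules:
        value = forecast.get(rule.metric)
        if value is not None and not (rule.low <= value <= rule.high):
            log.append(GovernanceEntry(property_id, rule.name, value))
    return log

def resolve(entry, action, approved_by, rationale):
    """Record who approved the fix and why; refuse undocumented changes."""
    if not rationale.strip():
        raise ValueError("adjustments and overrides need a documented rationale")
    entry.action, entry.approved_by, entry.rationale = action, approved_by, rationale

rules = [
    DomainRule("occupancy_at_most_100pct", "occupancy", 0.0, 1.0),
    DomainRule("exit_cap_within_comps", "exit_cap_rate", 0.045, 0.085),  # hypothetical comp range
    DomainRule("rent_growth_plausible", "rent_growth", -0.10, 0.10),      # hypothetical bound
]
log = check_forecast("MF-0142", {"occupancy": 1.20, "exit_cap_rate": 0.038,
                                 "rent_growth": 0.04}, rules, [])
resolve(log[0], "model_adjusted", "model risk committee",
        "Feature leakage: post-period lease-up data entered training; retrained.")
for e in log:
    print(e.property_id, e.rule, e.value, e.action)
```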

Domain checks and scenario tests aren't a one-and-done task. Use past case studies - both successes and failures - to refine your domain thresholds and stress parameters. Retrospectively applying current models to historical market periods, like regional downturns or rapid growth cycles, can reveal where forecasts deviated from actual outcomes. These lessons help define what "plausible but severe" looks like for specific U.S. markets and asset classes, improving the reliability of future validation efforts.

Operationalize and Monitor Forecasting Models

Validating a model doesn’t stop once it clears statistical and domain checks. The real challenge begins when it’s put to work in underwriting, portfolio management, or investor reporting. A model that performs well in backtesting may falter when market conditions shift, data pipelines break down, or new asset classes are introduced. To ensure long-term success, forecasting models must be integrated into repeatable, auditable workflows with continuous monitoring. Without this ongoing oversight, even the most well-tested model can lose its edge over time.

Let’s dive into how to backtest these models and keep them effective after deployment.

Backtest and Monitor Performance

Backtesting bridges the gap between validation and deployment. It answers a critical question: How would this model have performed in past decision-making scenarios? Unlike traditional train-test splits, backtesting simulates real-world forecasting by using only the data available at each historical decision point. For example, if you’re forecasting 12-month net operating income (NOI) for a multifamily property, backtesting involves generating forecasts as of each quarter over the past three years using only the data available at that time, then comparing those forecasts to the actual NOI realized 12 months later.

The walk-forward methodology is particularly effective here. At each historical date, the model generates forecasts for future periods and records errors as actual results come in. This approach uncovers patterns that might go unnoticed with a single static test. Pairing error metrics with business KPIs - like variance to budget, variance to pro forma IRR, and leasing forecast accuracy - helps translate technical performance into financial impact.
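
A walk-forward backtest is short to express in code. This sketch uses a deliberately naive, hypothetical model (it compounds the average quarter-over-quarter growth of the last four quarters) so that the loop itself stays visible. The key property is that at each origin the model receives only `series[:origin + 1]`, so nothing realized after the decision date can leak in. The NOI series is made up for illustration.

```python
def trailing_growth_forecast(history, horizon, lookback=4):
    """Hypothetical naive model: compound the mean quarter-over-quarter growth
    of the last `lookback` quarters forward by `horizon` quarters."""
    recent = history[-(lookback + 1):]
    growth = [b / a - 1 for a, b in zip(recent, recent[1:])]
    g = sum(growth) / len(growth)
    return history[-1] * (1 + g) ** horizon

def walk_forward(series, model, first_origin, horizon):
    """At each origin the model sees only series[:origin + 1] (data known on that date)
    and is scored against the value realized `horizon` periods later."""
    results = []
    for origin in range(first_origin, len(series) - horizon):
        history = series[: origin + 1]
        forecast = model(history, horizon)
        actual = series[origin + horizon]
        results.append({"origin": origin, "forecast": forecast, "actual": actual,
                        "pct_error": (forecast - actual) / actual})
    return results

# Hypothetical quarterly NOI for one property, in $000s
noi = [510, 515, 522, 519, 530, 538, 541, 549, 552, 560, 571, 566, 575, 583, 590, 598]
bt = walk_forward(noi, trailing_growth_forecast, first_origin=4, horizon=4)
wf_mape = sum(abs(r["pct_error"]) for r in bt) / len(bt)
print(f"{len(bt)} origins, walk-forward MAPE {wf_mape:.2%}")
```

Each entry in `bt` keeps its origin, so errors can later be grouped by date to see whether they cluster around particular market events.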

Monitoring performance shouldn’t be an afterthought. Establish tiered thresholds to catch issues before they affect deals. For instance, an early-warning threshold might be a MAPE (Mean Absolute Percentage Error) above 5% for quarterly NOI at the property level, with a stricter 2% threshold at the portfolio level, where property-level errors partly offset one another. If the rolling six-month average error rises more than 50% above its prior-year level, or if specific submarkets or asset classes show persistent bias, flag the model for review. When thresholds are breached, a documented playbook should guide corrective actions, such as diagnosing data issues, re-estimating parameters, or segmenting the model by asset class or geography, rather than scrapping the model entirely.
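
The tiered rules can be expressed as a small review function. This is a sketch under stated assumptions. The 5% property-level and 2% portfolio-level MAPE limits and the 50%-over-prior-year trigger come from the text. The function names and the sample NOI figures are hypothetical. MAPE is undefined when an actual value is zero, which can happen for vacant or lease-up assets, so those periods need separate handling.

```python
def mape(forecasts, actuals):
    """Mean absolute percentage error; assumes no actual value is zero."""
    return sum(abs(f - a) / abs(a) for f, a in zip(forecasts, actuals)) / len(actuals)

THRESHOLDS = {"property": 0.05, "portfolio": 0.02}   # early-warning MAPE limits

def review_status(level, current_mape, rolling_6m_error, prior_year_error):
    """Return ("ok" or "review", reasons) for one model at one aggregation level."""
    reasons = []
    limit = THRESHOLDS[level]
    if current_mape > limit:
        reasons.append(f"{level} MAPE {current_mape:.1%} above {limit:.0%}")
    if prior_year_error > 0 and rolling_6m_error > 1.5 * prior_year_error:
        reasons.append("rolling 6-month error more than 50% above prior year")
    return ("review" if reasons else "ok"), reasons

noi_forecast = [1_020_000, 980_000, 1_050_000]   # hypothetical quarterly NOI, one property
noi_actual = [1_000_000, 1_010_000, 990_000]
status, why = review_status("property", mape(noi_forecast, noi_actual),
                            rolling_6m_error=0.046, prior_year_error=0.028)
print(status, why)
```

Here the property's MAPE (about 3.7%) is within its limit, but the rolling error has risen more than 50% above last year's level, so the model is still routed to review.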

Visualizing backtest results is key to understanding model behavior. Plot forecast versus actual outcomes for critical variables - like rent per square foot, occupancy, cap rates, and NOI - across properties, markets, and asset classes. Time-series charts can highlight whether errors cluster around specific events, such as Federal Reserve rate changes or seasonal leasing cycles. Error distributions can also reveal systematic biases. For example, if 70% of rent forecasts consistently fall below actuals, the model might be underestimating market strength, potentially leading to missed opportunities.
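
Whether a skew like 70% of forecasts falling below actuals reflects real bias or just noise depends on how many forecasts it rests on. Here is a minimal sketch, assuming you have paired forecasts and actuals. It computes the share of under-forecasts and the mean percentage error, then applies an exact two-sided sign test. The sign test is a standard nonparametric test, used here as one reasonable choice rather than a method the text prescribes. Exact ties are excluded from the counts.

```python
from math import comb

def bias_summary(forecasts, actuals):
    """Counts of under- and over-forecasts (ties excluded) and the mean
    percentage error (negative means forecasts run low)."""
    under = sum(1 for f, a in zip(forecasts, actuals) if f < a)
    over = sum(1 for f, a in zip(forecasts, actuals) if f > a)
    mpe = sum((f - a) / a for f, a in zip(forecasts, actuals)) / len(actuals)
    share = under / (under + over) if under + over else 0.0
    return {"under": under, "over": over, "share_under": share, "mpe": mpe}

def sign_test_p(under, n):
    """Two-sided exact sign test p-value under H0: P(under-forecast) = 0.5."""
    k = max(under, n - under)
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    return min(1.0, 2 * tail)

s = bias_summary([90, 95, 110], [100, 100, 100])
print(s, sign_test_p(s["under"], s["under"] + s["over"]))
```

For example, 14 under-forecasts out of 20 (70%) gives a p-value of roughly 0.12, which is weak evidence on its own. By contrast, 70 out of 100 gives a p-value far below 0.01.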

The importance of monitoring is backed by data: poor data quality accounts for about 62% of forecasting errors, while organizations with strong data quality controls can reduce error rates by roughly 37% [4]. This underscores the need to monitor not just the model but also the data pipelines feeding it. Keep an eye on input feature stability - ensuring rent rolls are timely, transaction comps are complete, and operating statements are consistently formatted. Alerts should flag when forecast errors or data quality metrics fall outside acceptable ranges.
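
Input monitoring can start with simple freshness, completeness, and schema checks for each data feed. In this sketch, the feed names, the required-field lists, the 45-day staleness limit, and the 95% completeness floor are all hypothetical defaults rather than industry standards. Tune them to how often each source is actually supposed to update.

```python
from datetime import date

REQUIRED_FIELDS = {   # hypothetical minimum schema per feed
    "rent_roll": {"unit_id", "lease_start", "lease_end", "monthly_rent"},
    "operating_statement": {"period", "account", "amount"},
}

def data_quality_issues(feeds, as_of, max_age_days=45, min_completeness=0.95):
    """Return human-readable issues for stale, incomplete, or malformed feeds."""
    issues = []
    for name, feed in feeds.items():
        age = (as_of - feed["last_updated"]).days
        if age > max_age_days:
            issues.append(f"{name}: stale, last updated {age} days ago")
        completeness = feed["records_received"] / feed["records_expected"]
        if completeness < min_completeness:
            issues.append(f"{name}: only {completeness:.0%} of expected records")
        missing = REQUIRED_FIELDS.get(name, set()) - set(feed["fields"])
        if missing:
            issues.append(f"{name}: missing fields {sorted(missing)}")
    return issues

feeds = {
    "rent_roll": {"last_updated": date(2024, 1, 1), "records_received": 200,
                  "records_expected": 200,
                  "fields": ["unit_id", "lease_start", "lease_end", "monthly_rent"]},
    "operating_statement": {"last_updated": date(2024, 3, 20), "records_received": 80,
                            "records_expected": 100, "fields": ["period", "amount"]},
}
for issue in data_quality_issues(feeds, as_of=date(2024, 3, 31)):
    print(issue)
```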

Tailored views for different stakeholders enhance usability. Analysts benefit from technical dashboards showing error distributions by asset type, market, and forecast horizon, along with input feature stability and version comparisons. Asset managers need operational views that compare forecasted and actual metrics like NOI, occupancy, and capital expenditures, with filters for region, risk profile, or sponsor. Executives require portfolio-level summaries that link forecasted and realized cash flows in U.S. dollars, supplemented with scenario-based projections to guide capital allocation and fundraising.

Governance and documentation are the glue that holds everything together. A model registry should log versions, training data ranges, feature sets, and validation results. Document the model’s purpose - whether for underwriting stabilized multifamily properties or forecasting value-add office opportunities - along with assumptions like cap rate reversion or absorption speed and limitations such as weaker performance in thinly traded rural markets. For U.S.-based investors, using familiar formats like dollars, fiscal periods, square feet, and basis points makes the model more accessible for audits, investor relations, and regulatory reviews.
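
A model registry does not need to be elaborate to be useful. The sketch below is a minimal in-memory version that holds the fields listed above: version, training data range, features, validation results, purpose, assumptions, and limitations. The class and field names are hypothetical, and the validation figure in the example is a placeholder rather than a real result. A production registry would persist records and control write access.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date

@dataclass(frozen=True)
class ModelRecord:
    model_id: str
    version: str
    purpose: str
    training_start: date
    training_end: date
    features: tuple
    validation: dict          # metrics recorded at sign-off
    assumptions: tuple = ()
    limitations: tuple = ()

class ModelRegistry:
    """In-memory registry; records are immutable and versions cannot be overwritten."""
    def __init__(self):
        self._records = {}

    def register(self, record):
        key = (record.model_id, record.version)
        if key in self._records:
            raise ValueError(f"{key} already registered; publish a new version instead")
        self._records[key] = record

    def latest(self, model_id):
        # dicts keep insertion order, so the last match is the most recently registered
        matches = [r for (m, _), r in self._records.items() if m == model_id]
        return matches[-1] if matches else None

    def export(self):
        return json.dumps([asdict(r) for r in self._records.values()], default=str, indent=2)

registry = ModelRegistry()
registry.register(ModelRecord(
    model_id="mf-noi-12m",
    version="1.0.0",
    purpose="12-month NOI forecasts for stabilized multifamily underwriting",
    training_start=date(2015, 1, 1),
    training_end=date(2023, 12, 31),
    features=("trailing_noi", "submarket_rent_growth", "occupancy"),
    validation={"rolling_origin_mape": 0.041},   # placeholder value, not a real result
    assumptions=("cap rate reversion to submarket mean",),
    limitations=("weaker in thinly traded rural markets",),
))
print(registry.export())
```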

When human experts override forecasts - perhaps due to unique events or property-specific insights - documenting the rationale is crucial. If overrides frequently occur around certain deal types or markets, it could signal that the model needs recalibration or segmentation. This feedback loop ensures the model stays aligned with evolving business and market conditions.
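
Override frequency is easy to track once each forecast records whether it was overridden and which segment it belongs to. This sketch computes an override rate per segment and flags segments for recalibration. The 25% rate trigger, the five-forecast minimum, and the segment labels are hypothetical. The minimum count keeps a segment with only one or two forecasts from being flagged on too little evidence.

```python
from collections import Counter

def override_report(forecasts, min_count=5, flag_rate=0.25):
    """Override rate per segment, flagging segments with enough history and a high rate."""
    totals, overridden = Counter(), Counter()
    for f in forecasts:
        totals[f["segment"]] += 1
        overridden[f["segment"]] += bool(f["overridden"])
    return {
        seg: {
            "n": n,
            "rate": overridden[seg] / n,
            "recalibrate": n >= min_count and overridden[seg] / n >= flag_rate,
        }
        for seg, n in totals.items()
    }

history = (
    [{"segment": "office/CBD", "overridden": i < 4} for i in range(10)]
    + [{"segment": "multifamily/suburban", "overridden": i < 1} for i in range(10)]
    + [{"segment": "retail/strip", "overridden": True} for _ in range(2)]
)
report = override_report(history)
for seg, row in report.items():
    print(seg, row)
```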

Centralize Insights with CoreCast

To operationalize forecasts effectively, they need to be seamlessly integrated into broader business workflows. Relying on disconnected tools and spreadsheets can make it difficult to track which forecasts were used for specific deals, compare predictions to actual outcomes, or ensure consistent assumptions across teams. A centralized platform solves these issues by bringing forecasts, property data, deal pipelines, and portfolio performance together in one place.

CoreCast is designed for this purpose. Unlike traditional property management or bookkeeping systems, CoreCast supports the full investment lifecycle - from initial screening and underwriting to asset management and investor reporting. Users can underwrite any asset class or risk profile, monitor their pipeline through deal stages, analyze portfolios, and create branded reports for investors and partners - all within a single platform.

When forecasting models are integrated into CoreCast, projections are displayed alongside actual leasing, income, and sale performance, making variance analysis a routine part of workflows. For instance, if a model predicts 4% annual rent growth for a suburban office property, CoreCast will show this forecast next to the property’s actual rent roll and leasing activity. Asset managers can quickly assess whether the forecast holds up and adjust strategies if deviations occur.

CoreCast also tracks the entire deal and property lifecycle, linking specific model versions to each stage - from initial screening through post-close monitoring. This creates a comprehensive audit trail, showing which assumptions were used during underwriting, how they changed during due diligence, and how well they aligned with actual performance after acquisition. Over time, this data provides valuable insights into which markets, asset classes, or sponsors tend to outperform or underperform forecasts, helping refine future assumptions and risk assessments.

The platform’s robust portfolio analytics and reporting tools make it easy to package forecasts, backtests, and scenario analyses into dashboards and reports for investment committees and capital partners. Instead of exporting data to Excel or PowerPoint and reconciling numbers across multiple tools, users can generate branded reports directly from CoreCast, saving time and ensuring consistency.

CoreCast also integrates seamlessly with third-party systems like Buildium, QuickBooks, and RealPage. Operational data - such as rent collections, operating expenses, and capital expenditures - flows into CoreCast automatically, enabling users to compare forecasts with actual performance without manual data entry. For example, if a forecast assumes a 35% operating expense ratio but actual expenses hit 40%, CoreCast highlights the variance, prompting further investigation into whether the issue is temporary or signals a need for model adjustments.
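
The variance check in that example is simple arithmetic. The sketch below is a generic illustration, not CoreCast's implementation or API. It compares forecast and actual ratios and flags gaps larger than a hypothetical tolerance of 2 percentage points.

```python
def opex_ratio(operating_expenses, effective_gross_income):
    """Operating expenses as a share of effective gross income."""
    return operating_expenses / effective_gross_income

def variance_flags(items, tolerance=0.02):
    """items: (metric, forecast, actual) tuples; tolerance is in absolute ratio points."""
    report = []
    for metric, forecast, actual in items:
        diff = actual - forecast
        report.append({"metric": metric, "forecast": forecast, "actual": actual,
                       "variance": diff, "flag": abs(diff) > tolerance})
    return report

rows = variance_flags([
    ("opex_ratio", 0.35, opex_ratio(400_000, 1_000_000)),   # 35% forecast vs 40% actual
    ("occupancy", 0.93, 0.925),
])
for r in rows:
    print(f"{r['metric']}: {r['variance']:+.1%} {'FLAG' if r['flag'] else ''}")
```

With $1,000,000 of effective gross income (a hypothetical figure), the 5-point gap between a 35% and a 40% expense ratio means $50,000 more in annual operating expenses than underwritten.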

Real-time updates ensure that as new data arrives - whether it’s a signed lease, an expense invoice, or a transaction comp - forecasts and variance reports are refreshed instantly. This centralized, dynamic approach ensures that validated forecasts stay aligned with market realities, enabling timely adjustments and better decision-making.

Conclusion

Validation isn't a one-and-done task; it's an ongoing process that spans from setting clear objectives to monitoring performance after deployment. The most dependable models are those that align closely with specific decision-making needs - whether you're underwriting a $15 million multifamily acquisition, determining leverage for a portfolio, or forecasting five-year exit values for a development project. Each scenario comes with unique demands, such as varying forecast horizons, error tolerances, and risk thresholds.

A critical component of this process is maintaining strong data integrity. Poor data quality is responsible for about 62% of forecasting errors, yet organizations that prioritize robust data quality controls can reduce those errors by nearly 37% [1]. Practices like thorough data cleaning and time-aware partitioning are essential for ensuring that models perform well when applied to real-world scenarios. Choosing validation strategies and error metrics that reflect the financial stakes and reliability requirements of each decision is equally important. Metrics should be tailored to the size of the assets and the associated risks. For example, a dollar-weighted error keeps a 5% miss on a $50 million asset from counting the same as a 5% miss on a $2 million one.

Domain expertise also plays a vital role in creating forecasts that are not only statistically accurate but also economically meaningful. Stress-testing scenarios, such as shifts in lease-up timelines, construction costs, interest rates, and absorption rates, keeps forecasts grounded in practical realities. Because market conditions keep moving, this domain validation also has to be paired with ongoing performance tracking.

Continuous backtesting and monitoring finalize the validation loop. Comparing past forecasts to actual outcomes over six- or twelve-month intervals can reveal when models deviate due to market changes, data pipeline errors, or structural shifts. Establishing tiered thresholds - like flagging a review if MAPE exceeds 1.5 times the development benchmark - helps identify and address issues early, minimizing their impact on deals or investor confidence.

Centralizing these efforts within an integrated platform like CoreCast simplifies monitoring and reporting. Instead of managing a web of spreadsheets and disconnected tools, CoreCast allows you to track which model versions were used for specific deals, evaluate predictions against actual outcomes, and create polished reports for investment committees - all in one place. By integrating with property management systems, CoreCast can pull in real-time operational data to immediately flag performance discrepancies, ensuring that issues are addressed promptly. While this infrastructure doesn't replace the need for validation, it makes consistent, scalable validation achievable.

FAQs

What’s the difference between k-fold cross-validation and rolling-origin validation in real estate forecasting?

Both k-fold cross-validation and rolling-origin validation are popular methods for evaluating forecasting models. While they share the goal of assessing performance, they are tailored for different scenarios and applied differently.

- K-fold cross-validation divides the dataset into k subsets (or "folds"). The model is trained on k-1 folds and tested on the remaining one, and the process is repeated k times so that every fold serves once as the test set. Because each observation contributes to both training and testing, this method is a solid choice for estimating the general accuracy of static, cross-sectional models such as property valuation models. However, it ignores time order, so later data can end up training a model that is scored on earlier periods.

- Rolling-origin validation is specifically suited for time-series data, such as real estate market trends. It respects chronological order by training the model on past data (either an expanding window or a fixed-length rolling window) and testing it on the periods that follow. The forecast origin is then moved forward and the process is repeated. This approach closely mimics real-world forecasting, where predictions rely on historical patterns to anticipate future outcomes.

In real estate forecasting, rolling-origin validation is often the go-to method because it aligns with how predictions are made in practice - using past trends to inform future decisions.
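
The practical difference between the two methods is which rows each split trains on. In this dependency-free sketch (the function names are hypothetical), k-fold uses contiguous folds without shuffling, so some training rows come after the test rows. Rolling-origin splits, by contrast, always train strictly on earlier periods. Setting `window=None` gives an expanding window, and an integer gives a fixed-length rolling window. If you already use scikit-learn, `KFold` and `TimeSeriesSplit` in `sklearn.model_selection` provide comparable splitters (`TimeSeriesSplit`'s `max_train_size` caps the window).

```python
def kfold_splits(n, k):
    """Yield (train_idx, test_idx) for k contiguous folds; time order is ignored."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, test
        start += size

def rolling_origin_splits(n, initial, horizon=1, step=1, window=None):
    """Yield (train_idx, test_idx) where all training rows precede the test period.
    window=None -> expanding window; int -> fixed-length rolling window."""
    origin = initial
    while origin + horizon <= n:
        lo = 0 if window is None else max(0, origin - window)
        yield list(range(lo, origin)), list(range(origin, origin + horizon))
        origin += step

for train, test in rolling_origin_splits(12, initial=8, window=4):
    print("train", train, "-> test", test)
```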

How do domain checks and scenario testing enhance the reliability of real estate forecasting models?

Domain checks and scenario testing are essential for enhancing the reliability of real estate forecasting models. They ensure predictions are accurate, practical, and ready for real-world use.

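Domain checks verify that the model's outputs are consistent with industry norms and real-world limits: occupancy cannot exceed 100%, rent growth should stay within realistic ranges, and cap rates should fall within the range of comparable transactions. Catching these inconsistencies keeps statistically strong but unrealistic forecasts out of investment decisions.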

Scenario testing, on the other hand, examines how the model performs under various hypothetical conditions, such as shifts in market trends or changes in economic factors. By simulating these scenarios, you can evaluate how the model's outputs respond to uncertainty and stress, which builds justified confidence in its predictions. Together, these approaches ensure your forecasting models are well-prepared for practical applications.

Why is it crucial to regularly monitor and backtest real estate forecasting models after deployment?

Keeping a close eye on real estate forecasting models and testing them regularly is key to ensuring they stay accurate and dependable. Real estate markets are constantly changing, influenced by factors like economic fluctuations, policy updates, or unforeseen events, all of which can affect how well a model performs over time.

By routinely evaluating these models, you can spot errors early, fine-tune predictions, and maintain trust in your forecasts. This approach not only supports better decision-making but also helps minimize risks and adjust to shifting market conditions with greater ease.